The SaaS Trap: Why Your Data Lives in a Cage You Don't Control

The SaaS revolution promised liberation. No more servers to maintain, no more software to install, no more upgrade cycles to manage. Just log in and get to work.

That promise was real. But somewhere along the way, the trade-offs stopped being discussed.

We've spent years working with enterprise GRC tools, and we've watched a pattern emerge. Organizations trade operational convenience for something far more valuable: control over their own data. In the AI era, that trade is becoming untenable.

The toy box analogy

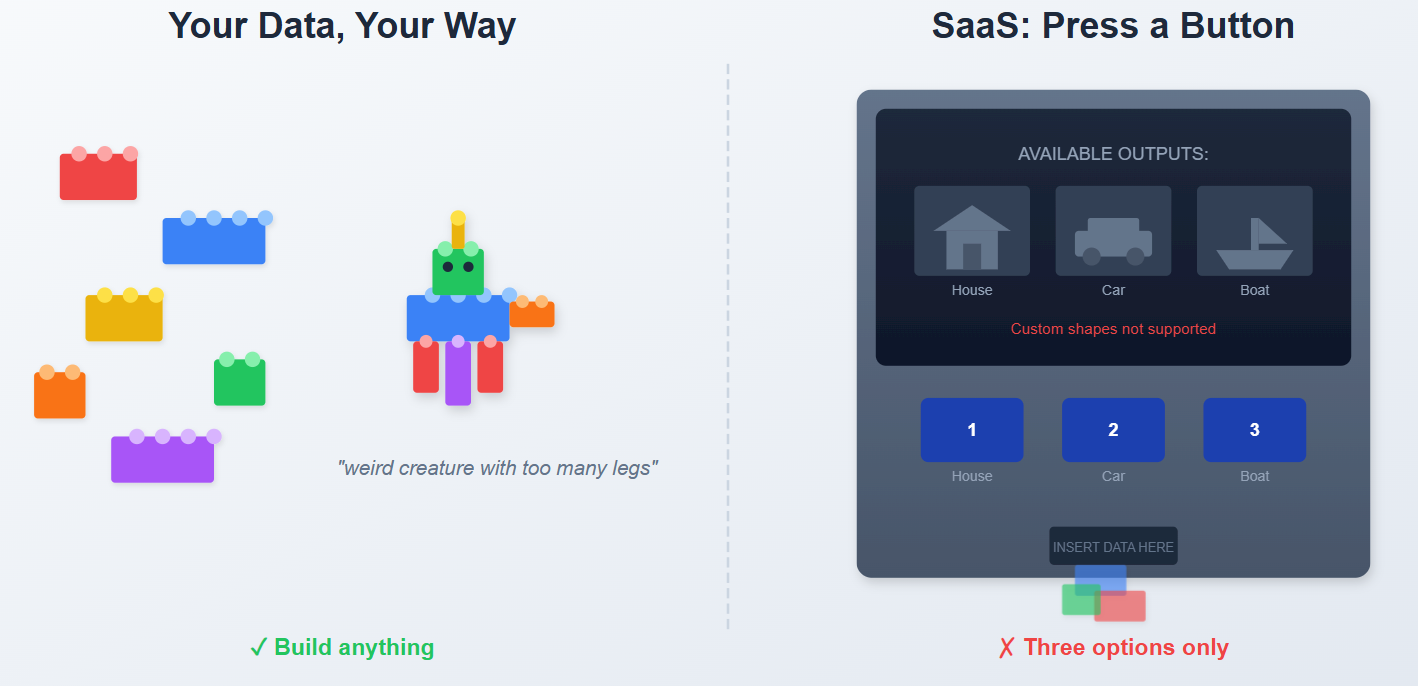

Imagine you're five years old. You have a box of LEGO bricks. You can build whatever you want: a house, a spaceship, a weird creature with too many legs. Your imagination is the only limit.

Now imagine someone takes your LEGOs and puts them in a special machine. The machine can only build three things: a house, a car, or a boat. Press button 1 for house. Button 2 for car. Button 3 for boat. The machine is easier to use, sure. But your weird creature? Impossible.

That's SaaS.

The machine is convenient. It works every time. But the moment you want something it wasn't designed for, you're stuck. Your LEGOs are inside, but you can't touch them.

The benefits are real, and insufficient

Let's be fair. SaaS delivers genuine value:

| Benefit | Reality |
|---|---|
| No infrastructure | True. No servers to patch. |
| Automatic updates | True. Features appear without IT projects. |
| Accessibility | True. Work from anywhere with a browser. |
| Reduced IT burden | True. Someone else handles uptime. |

These aren't trivial advantages. For many organizations, they're table stakes.

But here's the problem: each of these benefits comes bundled with constraints that become increasingly painful as your needs mature.

No infrastructure → But your data lives on someone else's servers, in someone else's data center, subject to someone else's security posture.

Automatic updates → But updates happen on the vendor's schedule. That report you relied on? Changed without warning. That workflow you built? Deprecated.

Accessibility → But "anywhere" means "anywhere the vendor's servers are reachable." Outages aren't hypothetical. For most major platforms, they're quarterly events.

Reduced IT burden → But increased vendor dependency. When something breaks, you open a ticket and wait. Your business timeline is irrelevant.

The four walls of the SaaS prison

1. The dashboard dictates reality

You see what the vendor decided you should see.

Need a different view? Submit a feature request. Maybe it ships in two years. Maybe it gets deprioritized. Maybe you're told it conflicts with "product vision."

In the meantime, you export to Excel. Manually. Every time.

We've seen audit teams spend days reformatting GRC tool exports because the built-in reports don't match their framework. The data exists. It's right there. But the tool won't show it the way auditors need to see it.

2. Processing happens their way

Every SaaS platform makes assumptions about how you'll use your data. Those assumptions are baked into the processing logic.

Want to filter by a field the vendor didn't anticipate? Too bad. Want to combine data from two modules that weren't designed to talk to each other? Build a workaround. Want to apply your own risk scoring algorithm? That's "customization." Please contact sales.

The data is yours. The processing is theirs. And those two facts are in constant tension.

3. Integration is a revenue center

Modern enterprises don't run on one system. They run on dozens, connected by APIs and data flows.

SaaS vendors know this. Which is why API access is often a premium tier feature. Why webhook limits exist. Why "integration partnerships" require separate contracts.

Your data should flow where you need it. Instead, it flows where the vendor's pricing model allows.

4. Exit costs are features, not bugs

Try to leave a SaaS platform after three years. Your data is in there, but in what format? With what structure? Compatible with what replacement?

The harder it is to export your data in a usable form, the more likely you are to stay. Vendors understand this. Many optimize for it.

Why this matters more in the AI era

The shift is simple: AI agents don't need dashboards. They need raw data.

When you ask Claude or GPT to analyze your access risks, it doesn't care about your GRC tool's visualization layer. It needs structured data: JSON, CSV, something it can parse and reason about.

But most SaaS platforms are designed around human interfaces, not machine interfaces. The dashboard is the product. The underlying data is an afterthought.

This creates an absurd situation: you have AI tools that can do real analysis, but your data is trapped behind interfaces designed for manual clicking.

| What AI Needs | What SaaS Provides |
|---|---|
| Structured, complete data exports | Paginated, filtered reports |
| Consistent schemas | Format changes with updates |
| API-first access | Dashboard-first design |
| Raw data for custom processing | Pre-processed, aggregated views |

The SaaS model was built for humans navigating menus. The AI era requires machines consuming data. Those are different requirements.

The visualization irony

Why does your SaaS tool need built-in visualizations at all?

Your organization already has:

- Power BI, Tableau, or Looker for business intelligence

- Data science teams with Python and R notebooks

- AI assistants that can generate charts on demand

- Established reporting standards and templates

When your GRC data is locked in a proprietary visualization layer, you're forced to maintain parallel reporting tracks. One set of reports from the tool. Another set rebuilt in your BI platform because stakeholders need integrated views.

Every hour spent wrestling data out of a SaaS dashboard is an hour not spent on actual analysis.

What data ownership actually looks like

Control isn't about avoiding SaaS entirely. It's about whether you can retrieve your complete data, move it between tools, and apply your own logic to it, without asking permission.

That's not a radical demand. It's the baseline that got lost somewhere in the SaaS transition.

How we approached this at MTC Skopos

We built MTC Skopos around a different philosophy: prepare the data, then get out of the way.

Your data never leaves your infrastructure

To be clear: your SAP authorization data never leaves your network. Ever.

MTC Skopos runs as a portable desktop application. There's no cloud component, no data transmission, no third-party storage. No vendor has access to your user assignments, role structures, or transaction logs.

This matters. SAP authorization data is a blueprint of who can do what in your most critical business systems. It reveals organizational structure, sensitive access patterns, and potential attack vectors. CISOs in regulated industries know this, which is why many security teams refuse to send this data to external SaaS platforms, regardless of compliance certifications or contractual assurances.

With MTC Skopos, that conversation never happens. The data stays where it belongs: under your control, on your infrastructure, subject to your security policies.

Built for the AI era

MTC Skopos was designed for AI-powered analysis from day one. Three modes of integration, same idea: your data, structured for machines to reason about.

Mode 1: Structured Exports

Every analysis exports to structured JSON. Not as an afterthought, but as a primary output format designed for machine consumption.

```json
{
  "user": "JSMITH",
  "risk_id": "F071",
  "conflicting_access": {
    "function_1": {
      "transactions": ["FB01", "FB02"],
      "execution_count": 147,
      "source_roles": ["ZS:FI:AP-PROCESS:C"]
    },
    "function_2": {
      "transactions": ["F110"],
      "execution_count": 0,
      "source_roles": ["ZS:FI:AP-PAYMENTS:C"]
    }
  }
}
```

Copy this into Claude, ChatGPT, or any AI assistant. Ask questions. Get analysis. No data transformation required.
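The same record is just as easy to work with in your own scripts. Here is a minimal Python sketch that parses the sample record above and labels the conflict by whether both sides were actually executed. The function name `classify_conflict` and the three labels are illustrative assumptions, not part of the MTC Skopos export format.

```python
import json

# Sample record, copied from the export example above.
record_json = """
{
  "user": "JSMITH",
  "risk_id": "F071",
  "conflicting_access": {
    "function_1": {
      "transactions": ["FB01", "FB02"],
      "execution_count": 147,
      "source_roles": ["ZS:FI:AP-PROCESS:C"]
    },
    "function_2": {
      "transactions": ["F110"],
      "execution_count": 0,
      "source_roles": ["ZS:FI:AP-PAYMENTS:C"]
    }
  }
}
"""


def classify_conflict(record: dict) -> str:
    """Label a conflict by how many of its functions were actually executed.

    The labels are hypothetical; pick whatever vocabulary your team uses.
    """
    counts = [f["execution_count"] for f in record["conflicting_access"].values()]
    if all(c > 0 for c in counts):
        return "exercised"       # both sides of the conflict were used
    if any(c > 0 for c in counts):
        return "partially_used"  # access exists, but only one side was used
    return "unused"              # access exists on paper only


record = json.loads(record_json)
label = classify_conflict(record)
print(record["user"], record["risk_id"], label)  # JSMITH F071 partially_used
```

In this sample, JSMITH posted documents 147 times but never ran the payment transaction, so the conflict is a candidate for removing unused access rather than for an urgent investigation.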

Mode 2: MCP Server Integration

For deeper integration, MTC Skopos includes a Model Context Protocol (MCP) server. This connects your analysis data directly to AI agents like Claude, so you can query your SoD results conversationally:

- "Which users have the highest-risk conflicts based on actual execution this quarter?"

- "Show me all payment-related SoD violations where both functions were executed in the same week."

- "Generate a remediation plan prioritized by business impact."

The AI queries your data in real time. No exports, no copy-paste, no context switching.
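For readers curious about what happens on the wire: MCP is built on JSON-RPC 2.0, and when an assistant decides to use a tool, the client sends a `tools/call` request naming the tool and its arguments. The sketch below only builds such a message. The tool name `query_sod_conflicts` and its arguments are hypothetical placeholders, since the tools the MTC Skopos server actually exposes are not listed in this article.

```python
import json
from itertools import count

# Each JSON-RPC request carries an id so the response can be matched to it.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


request = build_tool_call(
    "query_sod_conflicts",  # hypothetical tool name
    {"risk_category": "payments", "min_execution_count": 1},  # hypothetical arguments
)
print(request)
```

In practice you never write these messages by hand. The MCP client inside the AI application generates them, which is why the experience feels conversational.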

Mode 3: Local LLM for Zero Cloud Exposure

For organizations that want AI capabilities but refuse any cloud data exposure, the MCP server works equally well with local LLMs served through tools like Ollama or LM Studio. Your authorization data stays on your infrastructure. Your AI queries stay on your infrastructure. Complete analysis capability, zero external data transmission.
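To give a feel for how little is involved in keeping everything local, here is a rough sketch that sends an exported record straight to Ollama's local chat endpoint using only the Python standard library. Note that this bypasses MCP and posts the export directly, which is a simpler stand-in for the integration described above. The model name `llama3.1`, the system prompt, and the helper names are assumptions; the sketch also assumes Ollama is listening on its default port.

```python
import json
import urllib.request

# Ollama's default local endpoint; nothing leaves the machine.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, question: str, export: dict) -> dict:
    """Combine a question and an exported record into a chat request body."""
    return {
        "model": model,
        "stream": False,  # ask for one complete JSON response
        "messages": [
            {"role": "system", "content": "You are an SAP access risk analyst."},
            {
                "role": "user",
                "content": f"{question}\n\nData:\n{json.dumps(export, indent=2)}",
            },
        ],
    }


def ask_local_model(payload: dict, url: str = OLLAMA_CHAT_URL) -> str:
    """POST the payload to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]


sample_export = {"user": "JSMITH", "risk_id": "F071"}
payload = build_chat_payload(
    "llama3.1",  # assumed model name; use whichever model you have pulled
    "Is this conflict worth remediating first?",
    sample_export,
)
print(payload["messages"][1]["content"])

# To actually send it (requires a running Ollama server with the model pulled):
# print(ask_local_model(payload))
```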

No visualization lock-in

We deliberately don't embed complex dashboarding. MTC Skopos gives you analysis results and clean data. You visualize it however makes sense for your organization.

Want Power BI dashboards that match your other security reporting? The data's available. Want your AI assistant to generate ad-hoc charts during a meeting? Feed it the JSON. Want to build custom risk scores using your own methodology? The underlying data supports it.

The tool prepares and structures your data. What you do with it next is your choice.
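As an illustration of the BI path, the sketch below flattens export records into one CSV row per conflicting function, a shape that tools like Power BI or Tableau import without extra modeling. The column layout and the helper names `flatten_conflict` and `to_csv` are assumptions made for this example, not a format MTC Skopos prescribes.

```python
import csv
import io

# Column order for the flattened output (an illustrative choice).
FIELDNAMES = [
    "user", "risk_id", "function",
    "transactions", "execution_count", "source_roles",
]


def flatten_conflict(record: dict) -> list[dict]:
    """Turn one nested conflict record into one flat row per function."""
    rows = []
    for function_name, details in record["conflicting_access"].items():
        rows.append({
            "user": record["user"],
            "risk_id": record["risk_id"],
            "function": function_name,
            # Join multi-value fields so each stays in a single CSV cell.
            "transactions": ";".join(details["transactions"]),
            "execution_count": details["execution_count"],
            "source_roles": ";".join(details["source_roles"]),
        })
    return rows


def to_csv(records: list[dict]) -> str:
    """Render all records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    for record in records:
        writer.writerows(flatten_conflict(record))
    return buffer.getvalue()


sample = {
    "user": "JSMITH",
    "risk_id": "F071",
    "conflicting_access": {
        "function_1": {
            "transactions": ["FB01", "FB02"],
            "execution_count": 147,
            "source_roles": ["ZS:FI:AP-PROCESS:C"],
        },
        "function_2": {
            "transactions": ["F110"],
            "execution_count": 0,
            "source_roles": ["ZS:FI:AP-PAYMENTS:C"],
        },
    },
}

csv_text = to_csv([sample])
print(csv_text)
```

One row per function keeps execution counts easy to aggregate. If your reporting standard prefers one row per conflict, pivot the two functions into paired columns instead.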

Processing you control

The analysis engine runs locally. You can adjust parameters, rerun with different criteria, iterate on remediation scenarios, without API limits, without waiting for a server response, without worrying about rate throttling.

More importantly, you can inspect the outputs. Understand how results were generated. Validate against your own expectations. There's no black box.

The practical difference

Consider a typical access risk remediation project:

Traditional SaaS approach:

1. Log into the GRC platform

2. Navigate to the right report (hope you remember where)

3. Set filter parameters

4. Run the report (wait for processing)

5. Export results (partial data, paginated)

6. Reformat for analysis

7. Feed to AI or other tools

8. Lose context because the export didn't include everything you need

9. Go back to step 2 with different parameters

10. Repeat until frustrated

MTC Skopos approach:

1. Export SAP data (one-time setup)

2. Run analysis (minutes)

3. Export complete results to JSON

4. Feed to AI, BI tool, or custom processing

5. Iterate as needed

The difference isn't just speed. It's that your data remains accessible throughout the entire process. You're never waiting for permission, never fighting export limitations, never trying to reconstruct context that got lost in a filtered report.

What this enables

When data flows freely, new workflows become possible.

Feed your complete SoD analysis to Claude and ask for prioritization based on usage patterns and business context. The AI can reason about thousands of conflicts in seconds, if it has the data.

Combine authorization risks with other security metrics in your BI platform. Build executive dashboards that show the full picture, not siloed views from disconnected tools.

Apply your organization's specific risk framework. Weight conflicts differently based on business unit, regulatory exposure, or transaction materiality.
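A custom framework can be as small as a lookup table and a multiplier. In the sketch below, each risk ID gets a weight and the score is scaled by how many sides of the conflict were actually executed. Every number here is a made-up placeholder, and `RISK_WEIGHTS`, `USAGE_MULTIPLIER`, and `score_conflict` are hypothetical names. In your own version the weights could just as well come from business unit or regulatory exposure.

```python
# Hypothetical weights per risk ID; unknown risks fall back to the default.
RISK_WEIGHTS = {"F071": 10.0}
DEFAULT_WEIGHT = 1.0

# Hypothetical multipliers keyed by how many functions were actually executed.
USAGE_MULTIPLIER = {0: 0.5, 1: 1.0, 2: 2.0}


def score_conflict(record: dict) -> float:
    """Score one conflict as (risk weight) x (usage multiplier)."""
    weight = RISK_WEIGHTS.get(record["risk_id"], DEFAULT_WEIGHT)
    executed_sides = sum(
        1
        for function in record["conflicting_access"].values()
        if function["execution_count"] > 0
    )
    # Cap at 2 so records with more than two functions still map to a multiplier.
    return weight * USAGE_MULTIPLIER[min(executed_sides, 2)]


sample = {
    "user": "JSMITH",
    "risk_id": "F071",
    "conflicting_access": {
        "function_1": {"execution_count": 147},
        "function_2": {"execution_count": 0},
    },
}

score = score_conflict(sample)
print(sample["user"], score)  # JSMITH 10.0
```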

Test different remediation approaches. Compare "what if we prioritize financial risks" against "what if we focus on high-activity users." Run dozens of scenarios in the time it takes to generate one SaaS report.
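Because the data sits in plain structures, a what-if comparison is just a different sort key. The sketch below ranks the same conflicts under the two scenarios mentioned above. Only the JSMITH row comes from the sample export; the other two rows are invented test data, and the rule that financial risk IDs start with "F" is an assumption you would replace with your own classification.

```python
def make_record(user: str, risk_id: str, count_1: int, count_2: int) -> dict:
    """Build a record in the export's shape (transactions omitted for brevity)."""
    return {
        "user": user,
        "risk_id": risk_id,
        "conflicting_access": {
            "function_1": {"execution_count": count_1},
            "function_2": {"execution_count": count_2},
        },
    }


# JSMITH is from the sample export; USER_B and USER_C are made-up test data.
conflicts = [
    make_record("JSMITH", "F071", 147, 0),
    make_record("USER_B", "P010", 300, 20),
    make_record("USER_C", "F020", 5, 2),
]


def total_executions(record: dict) -> int:
    return sum(f["execution_count"] for f in record["conflicting_access"].values())


def is_financial(record: dict) -> bool:
    # Assumption: financial risk IDs start with "F".
    return record["risk_id"].startswith("F")


# Each scenario is a sort key; smaller keys are remediated first.
SCENARIOS = {
    "financial_first": lambda r: (not is_financial(r), -total_executions(r)),
    "high_activity_first": lambda r: -total_executions(r),
}


def rank(records: list[dict], scenario: str) -> list[str]:
    """Return user IDs in remediation order for the chosen scenario."""
    return [r["user"] for r in sorted(records, key=SCENARIOS[scenario])]


for name in SCENARIOS:
    print(name, rank(conflicts, name))
```

Adding a third scenario means adding one more entry to `SCENARIOS`, which is the kind of iteration that is hard to do through a fixed report screen.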

The bottom line

SaaS delivered real benefits: easier deployment, less infrastructure work, continuous updates. Those advantages haven't disappeared.

But the model also created dependencies that become increasingly costly as organizations mature. Rigid visualizations. Limited processing control. Export constraints. Exit barriers.

In an AI-enabled world, these constraints matter more than ever. AI agents can do real analysis, if they can access your data. Trapped behind dashboard-first interfaces, that data might as well not exist.

The question isn't SaaS versus on-premise. It's whether your tools give you control over your own data, or whether your data exists primarily to power someone else's platform.

We built MTC Skopos for organizations that want the first answer.

Ready to see what data ownership looks like in practice? Learn more about MTC Skopos or contact us for a demonstration.
